Making the video stream intelligent

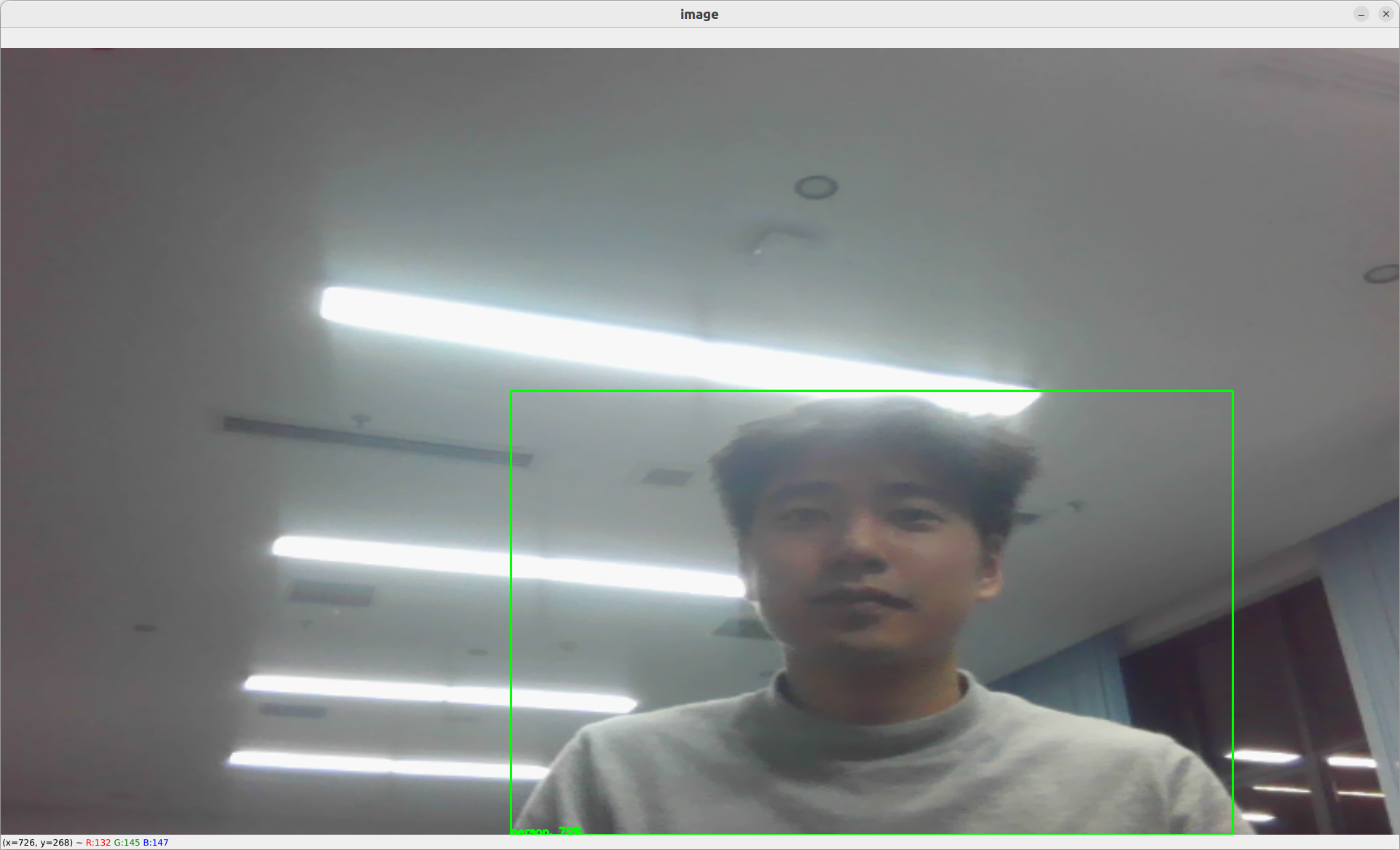

Let's add a yolov5 object detection operator that has already been written for us in ./operators/yolov5_op.py. This will help us detect object as bounding boxes within the webcam stream.

# operators/yolov5_op.py

"""

# Yolov5 operator

`Yolov5` object detection operator generates bounding boxes on images where it detects object.

More info here: [https://github.com/ultralytics/yolov5](https://github.com/ultralytics/yolov5)

`Yolov5` has not been finetuned on the simulation and is directly importing weight from Pytorch Hub.

In case you want to run `yolov5` without internet you can clone [https://github.com/ultralytics/yolov5](https://github.com/ultralytics/yolov5) and download the weights you want to use from [the release page](https://github.com/ultralytics/yolov5/releases/tag/v7.0) and then specify within the yaml graph the two environments variables:

- `YOLOV5_PATH: YOUR/PATH`

- `YOLOV5_WEIGHT_PATH: YOUR/WEIGHT/PATH`

You can also choose to allocate the model in GPU using the environment variable:

- `PYTORCH_DEVICE: cuda # or cpu`

## Inputs

- image as 1920x1080xBGR array.

## Outputs

- Bounding box coordinates as well as the confidence and class label as output.

## Graph Description

```yaml

- id: yolov5

operator:

outputs:

- bbox

inputs:

image: webcam/image

python: ../../operators/yolov5_op.py

Graph Visualisation

flowchart TB oasis_agent subgraph yolov5 yolov5/op[op] end subgraph obstacle_location_op obstacle_location_op/op[op] end oasis_agent -- image --> yolov5/op yolov5/op -- bbox as obstacles_bbox --> obstacle_location_op/op

"""

import os from typing import Callable

import numpy as np import pyarrow as pa import torch from dora import DoraStatus

pa.array([]) # See: https://github.com/apache/arrow/issues/34994 IMAGE_WIDTH = 1920 IMAGE_HEIGHT = 1080 DEVICE = os.environ.get("PYTORCH_DEVICE") or "cpu" YOLOV5_PATH = os.environ.get("YOLOV5_PATH") YOLOV5_WEIGHT_PATH = os.environ.get("YOLOV5_WEIGHT_PATH")

class Operator:

"""

Send bbox found by YOLOv5 on given image

"""

def __init__(self):

if YOLOV5_PATH is None:

# With internet

self.model = torch.hub.load(

"ultralytics/yolov5",

"yolov5n",

)

else:

# Without internet

#

# To install:

# cd $DORA_HOME_DEP/dependecies # Optional

# git clone https://github.com/ultralytics/yolov5.git

# rm yolov5/.git -rf

# Add YOLOV5_PATH and YOLOV5_WEIGHT_PATH in your YAML graph

self.model = torch.hub.load(

YOLOV5_PATH,

"custom",

path=YOLOV5_WEIGHT_PATH,

source="local",

)

self.model.to(torch.device(DEVICE))

self.model.eval()

def on_event(

self,

dora_event: dict,

send_output: Callable[[str, bytes], None],

) -> DoraStatus:

if dora_event["type"] == "INPUT":

return self.on_input(dora_event, send_output)

return DoraStatus.CONTINUE

def on_input(

self,

dora_input: dict,

send_output: Callable[[str, bytes], None],

) -> DoraStatus:

"""

Handle image

Args:

dora_input["id"] (str): Id of the input declared in the yaml configuration

dora_input["value"] (arrow.array (UInt8)): Bytes message of the input

send_output (Callable[[str, bytes]]): Function enabling sending output back to dora.

"""

if dora_input["id"] == "image":

frame = (

dora_input["value"]

.to_numpy()

.reshape((IMAGE_HEIGHT, IMAGE_WIDTH, 4))

)

frame = frame[:, :, :3]

results = self.model(frame) # includes NMS

arrays = np.array(results.xyxy[0].cpu())[

:, [0, 2, 1, 3, 4, 5]

] # xyxy -> xxyy

arrays[:, 4] *= 100

arrays = arrays.astype(np.int32)

arrays = pa.array(arrays.ravel().view(np.uint8))

send_output("bbox", arrays, dora_input["metadata"])

return DoraStatus.CONTINUE

> Operators are composed of:

>

> `__init__` methods that help create the object.

>

> `on_event` methods that is called when an event is received.

> There is currently 4 event types:

> - `STOP`: meaning that the operator was signalled to stop.

> - `INPUT`: meannig that an input was received.

> - You can use `dora_event['id']`, to get the id.

> - You can use `dora_event['data']`, to get the data.

> - You can use `dora_event['meatadata']`, to get the metadata.

> - `INPUT_CLOSED`: meannig that an input source was closed. This could be useful if the input is critical for the well behaviour of the operator.

> - `ERROR`: meaning that error message was received.

> - `UNKNOWN`: meaning that an unknown message was received.

>

> We have encapsulated `input` event in a `on_input` method but this is not required.

To add an operator within the dataflow. You need to explicit what the input and output are. You can reference node by their ids:

```yaml

# graphs/tutorials/webcam_yolov5.yaml

nodes:

- id: webcam

operator:

python: ../../operators/webcam_op.py

inputs:

tick: dora/timer/millis/100

outputs:

- image

env:

DEVICE_INDEX: 0

- id: yolov5

operator:

outputs:

- bbox

inputs:

image: webcam/image

python: ../../operators/yolov5_op.py

- id: plot

operator:

python: ../../operators/plot.py

inputs:

image: webcam/image

obstacles_bbox: yolov5/bbox

In this case, we have connected the webcam/image output to the image input of yolov5. yolov5/bbox is then connected to the plot/obstacles_bbox.

Inputs are prefixed by the node name to be able to separate name conflicts.

To run:

dora up

dora start graphs/tutorials/webcam_yolov5.yaml --attach

For more information on

yolov5, go on ouryolov5detail page